Edge AI Test Bed

This project aims to define a distributed software architecture that makes it possible to optimize the distribution of machine learning (ML) inference tasks in systems characterized by wireless connectivity and by heterogeneous hardware, software, and workloads. Leveraging edge-based service mesh mechanisms, we investigate how to efficiently orchestrate ML inference provisioning between resource-constrained edge nodes and IoT end-devices. The orchestration rules take several factors into account, including the trade-off between ML task latency and network latency, the computational capacity available on each edge node, and the availability of multiple network interfaces. Finally, we also explore how to combine gossip and distributed consensus protocols with federated learning approaches to further improve inference efficiency in these scenarios.
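To make the latency trade-off concrete, here is a minimal sketch of a latency-aware placement rule. It is not the project's orchestration logic, which is not specified here. Every name in it (`Interface`, `Node`, `Task`, `compute_ms`, `network_ms`, `place`) and every parameter is hypothetical. The sketch assumes a simple cost model: inference time scales with a node's spare throughput, offloading costs one input upload plus one round trip on the chosen interface, and the candidate with the lowest estimated end-to-end latency wins.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Interface:
    """Hypothetical model of a network path from the end-device to an edge node."""
    name: str
    bandwidth_mbps: float  # assumed usable uplink bandwidth in Mbit/s
    rtt_ms: float          # assumed round-trip time in milliseconds


@dataclass
class Node:
    """An inference-capable host: the IoT end-device itself or an edge node."""
    name: str
    gflops: float                           # assumed effective inference throughput (GFLOP/s)
    load: float = 0.0                       # fraction of capacity already in use, 0..1
    interfaces: Tuple[Interface, ...] = ()  # paths through which the device reaches this node


@dataclass
class Task:
    gflop: float      # compute cost of one inference, in GFLOP
    input_bytes: int  # input size that must be shipped when offloading


def compute_ms(task: Task, node: Node) -> float:
    """Inference time using only the node's spare capacity; inf if saturated."""
    spare = node.gflops * (1.0 - node.load)
    if spare <= 0.0:
        return math.inf
    return task.gflop / spare * 1000.0


def network_ms(task: Task, iface: Interface) -> float:
    """Upload time of the input plus one round trip (result transfer is ignored)."""
    bits = task.input_bytes * 8
    return bits / (iface.bandwidth_mbps * 1e6) * 1000.0 + iface.rtt_ms


def place(task: Task, device: Node, edges: List[Node]) -> Tuple[str, Optional[str], float]:
    """Pick the (node, interface) pair with the lowest estimated end-to-end latency.

    Returns (node name, interface name or None for local execution, latency in ms).
    """
    best: Tuple[str, Optional[str], float] = (device.name, None, compute_ms(task, device))
    for node in edges:
        c = compute_ms(task, node)
        for iface in node.interfaces:
            total = network_ms(task, iface) + c
            if total < best[2]:
                best = (node.name, iface.name, total)
    return best


if __name__ == "__main__":
    device = Node("device", gflops=10.0)
    jetson = Node("jetson", gflops=200.0, load=0.5,
                  interfaces=(Interface("wifi", 20.0, 10.0), Interface("lte", 10.0, 40.0)))
    rpi = Node("rpi", gflops=20.0, interfaces=(Interface("wifi", 20.0, 10.0),))
    print(place(Task(gflop=4.0, input_bytes=150_000), device, [jetson, rpi]))
```

With these illustrative numbers, the sketch offloads to `jetson` over `wifi` (about 110 ms, compared with 400 ms on the device), even though that node is half loaded. A very small model (for example `gflop=0.01`) stays on the device because the round trip alone exceeds its local compute time. The linear load model and the omission of result transfer, energy, and queueing dynamics are deliberate simplifications.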

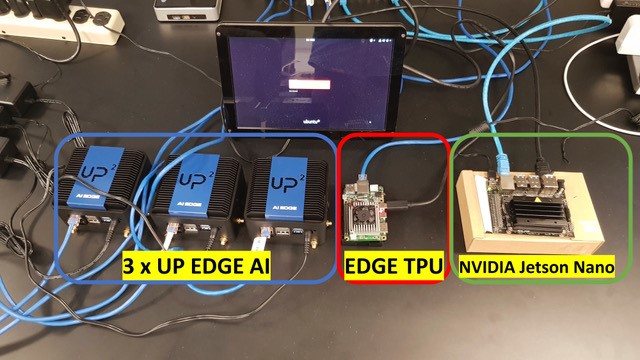

The implemented software architecture is evaluated on the Princeton EdgeAI testbed.

The EdgeAI testbed comprises several resource-constrained edge nodes, including: